How does the brain work, and how can we understand it? To view this big question from a broad perspective, I'm reporting some ideas about the brain that left the deepest impression on me during the past twelve months and that do not overlap with my own research focus. Enjoy the read! And check out previous year-end write-ups: 2018, 2019, 2020, 2021, 2022, 2023.

If you want to understand the brain, should you work as a neuroscientist in the lab? Or teach the subject to students? Or simply read textbooks and papers while having a normal job? In this blog post, I will share some thoughts on this topic.

1. Why do I do research?

There are three reasons why I’m doing research in neuroscience:

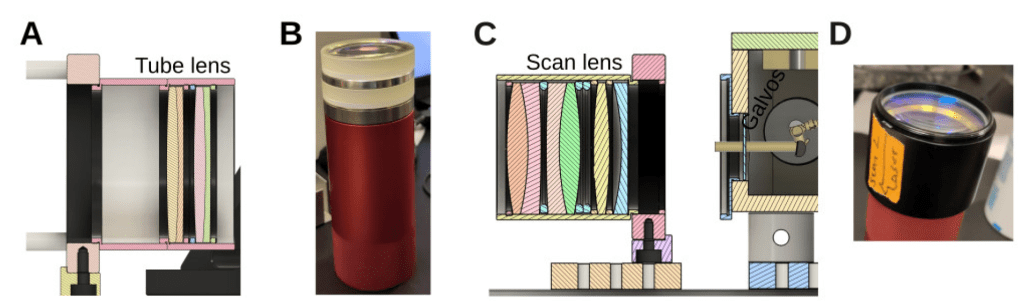

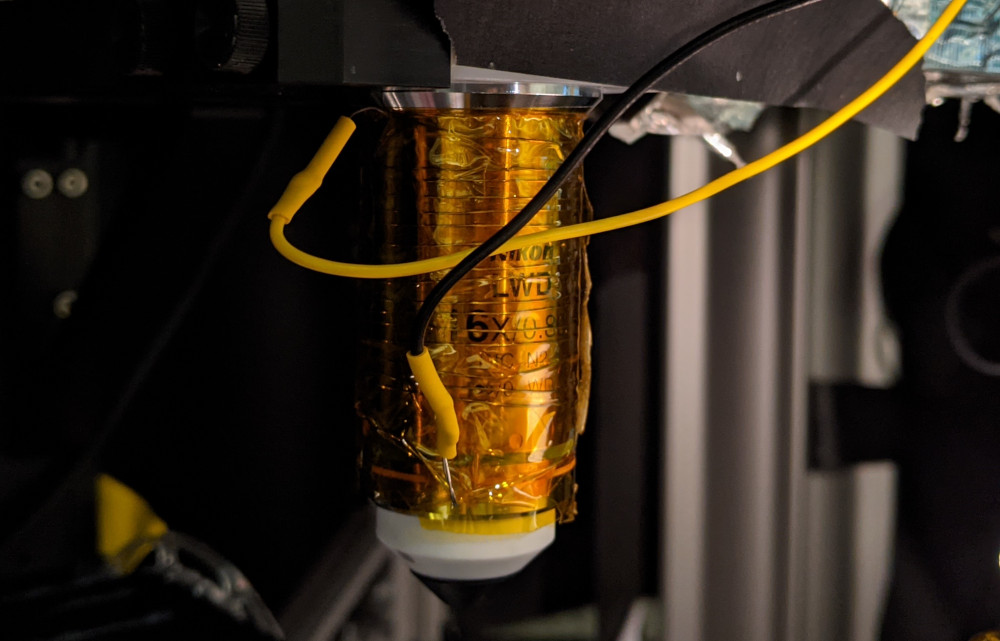

First, I like this job and what it entails: working with technology, building microscopes, working with animals, coding, analyzing data, and exploring new things every week. If you are familiar with this blog, you probably know how much I like these aspects of my work.

Second, with my research I want to contribute to the basic knowledge about the brain within the science community. I believe that a deeper basic understanding of the brain will ultimately have a positive impact on our society: on how we see ourselves, and on how we treat our own and other minds in health and disease. In this sense, I see myself as a servant to the public.

Third, and this is the focus of today’s blog post, doing research in neuroscience also enables me to increase and improve my own understanding of the cellular events in the brain, of thought processes, and of life in general. This is what drew me to science in the beginning.

In contrast to this last point, the work of a researcher embedded in the science machinery of the 21st century tends to focus on something else, and for understandable reasons. Most of the daily work of scientists revolves around making discoveries, having impact, and being seen as successful. It seems natural to assume that making discoveries is the same as better understanding the brain. But although discoveries might be the best way to increase humanity's overall insight into the brain, they might not be the best way to increase your own understanding of it.

2. Understanding by reading from others (“passive” research)

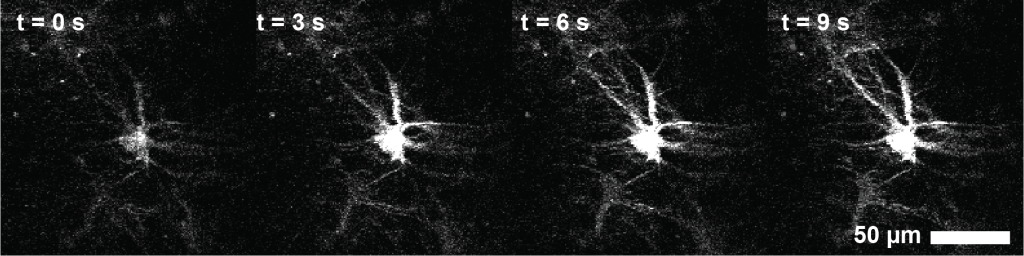

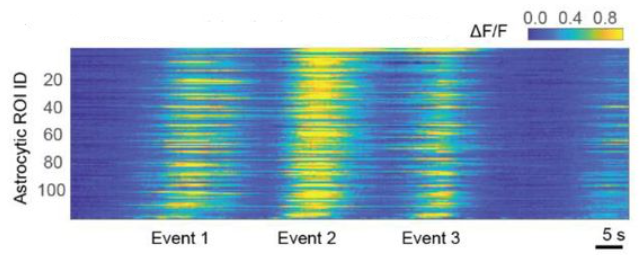

One of the tasks that kept me busiest in early 2024 was the final preparation of our publication on centripetal propagation of calcium signals in astrocytes. I still believe that this is my most important contribution to science so far, and I do think that I learnt a lot during this research project. However, the final steps of publication consisted of rather tedious interactions with copy editors, formatting figures and file types, preparing journal cover suggestions, and promoting the work through discussions, talks and public announcements. All of this may be helpful for my career and useful for the potential audience, and it can be fun as well, but it certainly does not advance my own understanding of the brain. I felt as though I was treading water: very busy and working hard, yet with the impression that I had temporarily lost touch with the heart of research.

At the same time, I had to step in on short notice to give a lecture to medical students about the motor system, covering cortex, basal ganglia, cerebellum and brainstem. Since I didn't find the existing lecture materials fully satisfying, I began researching the topic myself. Coming from physics, and having been interested in principles of neuroscience such as the dynamics of recurrent networks or dendritic integration rather than in anatomical details, I had never had a firm grasp of the motor system. Now I was forced to understand, in just a few days, the connections between motor cortex, cerebellum and basal ganglia, and their effects on brainstem and spinal cord. What roles do the "direct" and "indirect" pathways play in the striatum, and how are they influenced by dopamine? How does current research view these established ideas? What role does the cerebellum play? How should we think about David Marr's theory of the cerebellum? What is currently known about the plasticity of Purkinje cells? My understanding of these topics had been superficial at best. The challenge of delivering a two-hour lecture on this material, and of being able to answer any question from the students, forced me to think about it seriously.

After an intense weekend full of struggles, textbooks and review papers, I had not only prepared an acceptable lecture but also made real progress in my personal understanding of how the brain works. It wasn't directly relevant to my own research, but over the following months, I noticed that far more studies from the field of motor systems suddenly caught my attention, because I now understood better what they were about. In retrospect, I could also better appreciate the work on the brainstem done in the neighboring lab of Silvia Arber during my PhD.

Therefore, the most important progress in my understanding of the brain during early 2024 didn't come from my own active research or from a conference where I could catch up on the latest developments. Instead, it came from a weekend when I was forced to dive into a topic slightly outside of my comfort zone. Let's call this "passive" research, as it did not involve my own lab activities but "only" studying the conclusions of other scientists.

If I wanted to really understand the brain, wouldn't it make sense to work this way more often? That is, instead of spending five years applying my time and expertise to a narrowly defined scientific question, shouldn't I first aim to better comprehend the available knowledge?

3. The limitations of a "passive" approach to understanding the brain

Many years ago (around 2007-2009), I had already tried to understand the brain in such a "passive" way: by systematically absorbing and mapping out the existing knowledge, before I was an active researcher myself. At the time, I was in the middle of my physics studies. Physics itself wasn't my main interest but rather a means to learn the methods and ways of thinking necessary to penetrate neuroscience and ultimately understand the brain. At the same time, however, I realized that I lacked basic knowledge in neuroscience. For example, I had some notions about the different brain regions, but these notions were very vague and consisted of little more than names.

To remedy this, I started a project that I called "The brain on A4" (A4 is a paper format similar to US Letter). The idea was to search the literature for a given brain region, summarize the findings, and condense them onto a single A4 page. My vision was to eventually wallpaper my room with a hundred such A4 pages, so that the summarized facts and essential insights about all relevant brain regions would always be present as anchor points for my thought processes. This way, they would gradually sink into my mind and provide the foundation for a deeper understanding through reflection and the integration of new thoughts.

For illustration, here are two pages of the "Brain on A4" project, written in German (with a few French textbook excerpts included, since I was also studying at a French university at the time).

In short, the idea didn't work. Using this approach, I covered some brain regions, and when I read through these A4 drafts today, they don't seem completely off base. But concepts that are now familiar to me and connected to other topics had only vague meanings back then, when I copied them from Wikipedia, Scholarpedia or review articles that took me several days to go through. I could recall the keywords and talk about them, but when I put myself to the test, I couldn't truly grasp them.

Why? Because knowledge must grow organically. It needs both contextual embedding and emotional anchoring. This embedding can be a discussion partner, a project in which the knowledge is applied or tested, or, at the very least, the exam at the end of the semester where the knowledge is finally "applied".

In addition, I lacked the tools to probe this knowledge. Unlike maths, physics or foreign languages, knowledge about the brain comes in vague and confusing sentences that are difficult to evaluate. For most statements about the brain, it is difficult to say whether they are true, or even what they mean. The equation for gravitational potential energy, E = m·g·h, can be fully understood through derivations or examples. By contrast, the statement "The major input to the hippocampus is through the entorhinal cortex" (source) only makes sense (if at all) when the entorhinal cortex is well understood, which is not the case.

Moreover, neuroscientific publications are full of wrong conclusions and overinterpretations. For example, if I take the latest neuroscience article published in Nature, I get this News article about a research article by Chang et al. The main finding of the study is indeed interesting and worth reporting: in mice, recent memories are replayed, and thereby consolidated, during particular sleep phases in which the pupil is especially small. The News article, however, stresses that this segregation of recent memories into distinct phases may prevent catastrophic forgetting, an effect in which existing memories are overwritten because they use the same synapses. This interpretation is quite misleading. Catastrophic forgetting and the finding of this study are only loosely connected, which becomes clear after reading the Wikipedia page on catastrophic forgetting. For a lay person, it is almost impossible to recognize that this minor point of the discussion, which features prominently in the subheadings of the News article, is only a vague connection that does not reflect the core experimental findings of the study.

Similarly, when I made the A4 summaries, I meticulously listed the inputs and outputs of brain regions. But what could I make of the fact that the basal ganglia receive input from cortex, substantia nigra, raphe and formatio reticularis (as in my notes above), if each of those brain areas was defined in the same way, as a receiver of input from many other areas? Back in 2008, I wrote about the cortico-thalamic-basal ganglia loops and how they enable gating (see screenshot above), but only when I worked through the details again 16 years later, with a broader knowledge base, did I manage to see the context in which I could embed these facts and make them stick in my memory. And it took me this many years of working in neuroscience to slowly grow this context.

4. The limitations of a systematic approach to understanding the brain

A second reason why this approach of mapping the brain onto A4 pages didn't work may have been its systematic nature. A systematic approach is often useful, especially when coordinating with collaborators or orchestrating a larger project. However, I've come to believe that a more organic, or even chaotic, approach can be more effective, especially when it comes to understanding something. A systematic approach assumes that you can determine in advance what needs to be done and then faithfully follow this structure. For the A4 project, the systematic structure was the division into "brain regions". Of course, the brain cannot be understood by just listing the properties of brain regions; many important concepts operate at a different level, between brain regions or at the cellular level. I noticed the limitations of my approach soon enough and added A4 pages on other topics that I deemed relevant, like "energy supply to the brain" and "comparison with information processing in computers". The project lost its structure, for good reason. And soon after, before it became completely chaotic, I abandoned the project entirely.

One thing that I learnt from this failure is how a systematic approach can sometimes hinder understanding. In formal education, this is often hidden because curricula and instructors already provide the structure of the knowledge. But when it comes to acquiring new knowledge and insight, rather than merely processing and assembling pre-existing knowledge, the systematic approach must be continuously interrupted and reinvented to enable real progress. When I first heard about the hermeneutic circle, a concept from hermeneutics (the science of understanding texts), I had the impression that it accurately described the process of understanding. Following the hermeneutic circle, deeper understanding is approached not on a direct path but iteratively, by drawing circles around the object of understanding, gradually constructing a web of context and possibly, eventually, a path towards the goal. In this picture, the process of understanding is diffuse and unguided, and it is corrected only occasionally by conscious deliberation and a more systematic mind. As a consequence, the object of interest can only be treated and laid out systematically once it has been understood, but not on the way to this point.

5. The limitations of a non-systematic approach to understanding the brain

However, the unsystematic approach to understanding the brain has a major drawback: you lose the sense of your own progress, and you lose the overview of the whole. Often, progress is incremental and, over the years, so slow that you hardly notice you've learned something new, which leaves you unsatisfied. And, even more importantly, you lose the big picture.

This may also be one of the greatest barriers to understanding the brain: the possible inability of our minds to comprehend the big picture of such a complex system, where different scales are so intricately intertwined. A few years ago, I wrote a blog post about this topic ("Entanglement of temporal and spatial scales in the brain, but not in the mind"), which I still find relevant today. Can we, as humans with limited information-processing capacity and working memory, understand a complex system? Or, more precisely: what level of understanding is possible with our own working tool, the brain, and what kind of understanding lies beyond our reach?

Recently, Mark Humphries wrote a blog post addressing a similar question. He speculated that the synthesis and understanding of scientific findings may, in the future, no longer be carried out by humans but by machines, for example by a large language model or a machine agent tasked with understanding the brain. Personally, I find this scenario plausible but not desirable. An understanding of the brain by an artificial agent that is beyond my own ability may be practically useful, but it doesn't satisfy my scientific curiosity. Therefore, I believe that we should focus on how to support our own understanding in its chaotic nature and, perhaps retrospectively, wrest structure and meaning from this chaos. How? By writing for yourself.

6. The importance of writing things up for yourself

As I mentioned earlier, I believe that understanding the brain is a chaotic and iterative process that does not proceed systematically or in a predictable trajectory. Instead, it involves trying out different approaches and constantly adopting new perspectives. For me, these approaches include reading textbooks and preparing lectures; reading and summarizing current papers; and conducting my own “active” research in the lab and in silico.

During this process, I found that shifting one's perspective can be particularly helpful for gaining a better understanding. To gain such new perspectives, I regularly read open reviews, which often present a different picture than the scientific articles themselves. I also like to explore new, ambitious formats that shake off the dust of the traditional publication system and attempt to take a more naive view of specific research topics. A venue that is still fresh in spirit and that I can recommend for this purpose is thetransmitter.org.

However, the best way to absorb knowledge and integrate it into one's own world model is to process it actively. The two methods I find most useful are mind maps and writing. I typically use mind maps when I'm completely confused, either about the direction of a project or about my approach to neuroscience in general. I just start with a single word, circled in the center of a page, and then add thoughts associatively for 20-30 minutes. The result is not necessarily useful for others. However, seeing the keywords laid out before me, I can often spot the missing links or identify things that should be grouped together, or grouped differently.

Below is an example of such a mind map. I drew it in 2016, a little more than two years into my PhD, at a stage where I was struggling to shape my PhD project. Unlike most of my mind maps, which are hand-drawn and therefore almost illegible to others, this one was drawn on a computer (in Adobe Illustrator, to play around with the program). I was brainstorming about the role of oscillations in the olfactory bulb of zebrafish (check out this paper if you're interested). Although I did not follow up on this project, some of the ideas are clearly predecessors of analyses in my main PhD paper on the olfactory cortex homolog in zebrafish. The mind map is basically a loosely connected assembly of concepts and ideas that had been living in my thoughts, often inspired by reading computational neuroscience papers or by discussions with Rainer Friedrich, my PhD supervisor. I used this map to visualize, regroup and connect these ideas:

The second method is writing, and I believe that it is the only true method to really understand something. In contrast to reading, writing is an active process, and therefore much more powerful for embedding and anchoring knowledge in your own mind. You may have heard of the illusion of explanatory depth, the tendency of our brain to trick us into thinking we understand something simply because we've heard about it or can name it. Only when we attempt to explain or describe a concept do we realize how superficial our thoughts were and how shaky our mental models really are. Writing is a method for systematically destroying these ill-founded mental structures. (Expressing an idea in mathematical or algorithmic terms is even more precise and therefore even better for this purpose!) When we have destroyed such an idea, we shouldn't mourn the loss of a seemingly brilliant concept but instead celebrate the progress we've made in refining our understanding.

In addition, writing has always been a form of storytelling. By putting our understanding of the brain into words – even if those words are initially fragmented, scattered, and contradictory – writing seeks to find meaning, identify patterns, and embed details into a larger whole. With a bit of practice, writing does all of this for you.

Importantly, I'm not talking about writing papers or grant proposals here. In those cases, you have a clear audience in mind (editors or reviewers) and inevitably tailor your writing to meet their expectations. And you will be happy and satisfied when you produce something that meets the standards for publication. Instead, I'm talking about writing for oneself. This mode of writing confronts your own critical voice and follows ideas without regard for what the text will look like. And I believe that this way of writing, which is not directly rewarded by supervisors or reviewers, is the most useful in the long run.

I believe that many researchers in neuroscience (and maybe you as a reader) initially started to work as neuroscientists not because they wanted to be famous or successful or well-known but because they wanted to understand how the brain works. So if you want to take this seriously, write for yourself.