In a living animal, the brain moves up and down within the skull. This motion can be caused by breathing, heartbeat and tongue movements, but also by changes in posture or by running. Which movements are most likely to occur depends on the brain region and the posture. For imaging of neuronal activity with two-photon microscopy through a window, the coverslip glass is usually gently pressed onto the brain to reduce motion. As a consequence, brain motion is typically smaller close to the glass surface and becomes more prominent when imaging deeper below the surface.

Why axial movement artifacts are bad

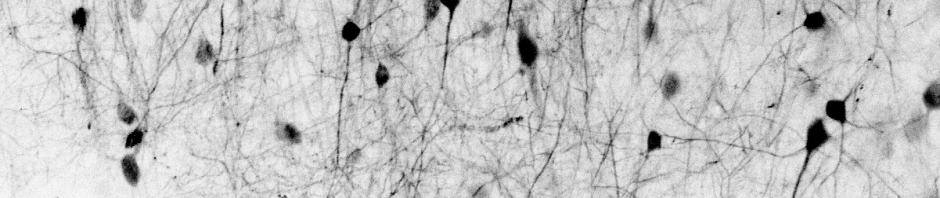

From the point of view of data analysis, there are two fundamentally different types of brain movement: lateral movement (within the xy imaging plane) and axial movement (along the z-direction). Lateral movement can be corrected (except at the edges of the FOV) by shifting the image back to the “right place” within the plane. Axial movement, on the other hand, shifts the imaging focus to a different depth and cannot be corrected post hoc. In my experience, axial motion artifacts are often underestimated or ignored. But even if they are small (and barely visible by eye after non-rigid xy motion correction), they can affect analyses that average across many repetitions or “trials”. Especially at low imaging resolution and low signal-to-noise ratio, it is often difficult to judge by eye whether a signal change was due to z-motion, to movement-related neuronal activity, or to both. Imaging of small structures like axons or laterally spreading dendrites is especially prone to movement artifacts, and there are many additional edge cases where brain movement might influence the analysis.

Ideally, one would be able to correct for z-motion online during the recording. For example, when the brain moves up by 5 µm, the focus of the microscope would move up by the same amount, thereby imaging the same tissue despite the motion. Typically, z-motion of the brain is relatively slow, often below 20 Hz in mice. An online motion correction algorithm would therefore need a temporal responsiveness of, let’s say, 40-50 Hz.

ECG-synchronized image acquisition

There have been several suggested methods to solve this problem. Paukert and Bergles (2012) developed a method that addresses only lateral (not axial) movement, but it is still worth mentioning. They recorded the electrocardiogram (ECG) and used the peak of the R-wave to reliably trigger image acquisition. Strictly speaking, they therefore did not correct for heartbeat-induced motion; instead, they acquired images such that the heartbeat-induced displacement of the brain was the same for all frames. Better imaging preparations (more pressure exerted onto the brain by the coverslip window) have made this approach less relevant for cortical imaging. However, for deeper imaging, e.g., with 3P microscopy, the stabilization by the coverslip is weaker. Therefore, Streich et al. (2021) used ECG-synchronized 3P scanning to improve image quality. They went a few steps further than Paukert and Bergles (2012): they used past heartbeats to predict the next R-wave online with an FPGA and paused image acquisition during a window around it.
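The gating logic can be sketched in a few lines. This is a minimal sketch that assumes R-wave times have already been detected from the ECG. It predicts the next R-wave from the mean of the last few RR intervals and blocks acquisition in a window around that prediction. The function names, the simple averaging predictor and the window lengths (`pre_s`, `post_s`) are hypothetical choices for illustration. This is not the FPGA implementation of Streich et al. (2021).

```python
import numpy as np


def predict_next_r_wave(r_times_s, n_recent=5):
    """Predict the next R-wave time (s) from the mean of the most recent RR intervals.

    r_times_s: sorted R-wave times in seconds (at least two).
    """
    r = np.asarray(r_times_s, dtype=float)
    if r.size < 2:
        raise ValueError("need at least two R-waves to estimate an RR interval")
    rr = np.diff(r)[-n_recent:]  # the last n_recent RR intervals
    return float(r[-1] + rr.mean())


def acquisition_allowed(t_s, next_r_s, pre_s=0.01, post_s=0.03):
    """Return False inside a (hypothetical) blanking window around the predicted R-wave."""
    return not (next_r_s - pre_s <= t_s <= next_r_s + post_s)


# Example with an assumed mouse heart rate of about 600 bpm (RR interval of about 100 ms).
r_waves = [0.0, 0.1, 0.2, 0.3]
next_r = predict_next_r_wave(r_waves)
```

In a real system, this check would run on dedicated hardware. The predictor would also have to cope with heart-rate variability, for example by weighting recent intervals more strongly.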

Correction of stereotypic axial motion with an ETL

A conceptually similar method was developed by Chen et al. (2013). They also targeted motion artifacts caused by clearly defined events, in this case not heartbeats but licking. Depending on the brain region and the effort the mouse has to make, licking can cause huge motion artifacts. Chen et al. (2013) did not want to stop imaging during licking but instead corrected the axial focus using an electrically tunable lens (ETL). They observed that licks consistently changed the focus of the imaging plane. They therefore used a lick sensor whose output was converted into a calibrated corrective signal that shifted the focus of the ETL. The approach thus assumes that lick-induced movement is stereotyped, so the correction might have to be recalibrated for each mouse, or for motion caused by other parts of the body. However, it is, to my knowledge, the first attempt to truly correct for axial brain motion in vivo.
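The idea of a calibrated, stereotyped correction can be illustrated with a simple model. This sketch assumes that every lick displaces the brain by the same calibrated axial "kernel" starting at the lick onset, and that overlapping licks add linearly. The resulting trace is the focus offset that would follow the tissue. The kernel shape, the sampling and the linear superposition are illustrative assumptions, not details from Chen et al. (2013). The conversion from µm to ETL drive current is omitted because it would need its own calibration, including the sign convention.

```python
import numpy as np


def etl_correction(lick_onsets, n_samples, kernel_um):
    """Focus-offset trace (um) from lick onsets (sample indices).

    Assumes every lick displaces the brain by the same calibrated kernel and that
    overlapping licks add linearly; the focus is shifted by the same amount as the
    predicted displacement so that it follows the tissue.
    """
    k = np.asarray(kernel_um, dtype=float)
    predicted_motion = np.zeros(n_samples)
    for onset in lick_onsets:
        if not 0 <= onset < n_samples:
            continue  # ignore licks outside the recording window
        end = min(onset + k.size, n_samples)
        predicted_motion[onset:end] += k[: end - onset]
    return predicted_motion
```

For example, `etl_correction([0, 4], 6, [1.0, 2.0, 1.0])` places the hypothetical three-sample kernel at both lick onsets and truncates the second one at the end of the recording.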

It would be interesting to combine video recordings of more diverse behaviors, fast processing and a predictive component as in Streich et al. (2021) to develop a more general brain movement predictor and corrector. For example, an algorithm could learn to map behaviors to expected brain movements during a 10-min calibration period. It could then use this mapping in subsequent recordings to anticipate brain motion and correct for it with an ETL or another remote scanning device. Wouldn’t this be cool?
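Below is a minimal sketch of the calibration step. It assumes that behavior has already been reduced to a few numerical features per time point (for example, from video tracking) and that axial brain motion was measured during the calibration period. It fits a linear map with ordinary least squares. The feature set, the linear model and the function names are hypothetical. A useful predictor would probably also need time-lagged features so that it can anticipate motion rather than only explain it.

```python
import numpy as np


def fit_motion_model(behavior_features, z_motion_um):
    """Least-squares linear map (with intercept) from behavior features to axial motion.

    behavior_features: array of shape (n_samples, n_features)
    z_motion_um: array of shape (n_samples,) measured during calibration
    """
    X = np.column_stack([np.ones(len(behavior_features)), behavior_features])
    weights, *_ = np.linalg.lstsq(X, z_motion_um, rcond=None)
    return weights


def predict_motion(weights, behavior_features):
    """Predicted axial motion (um) for new behavior features, using the fitted weights."""
    X = np.column_stack([np.ones(len(behavior_features)), behavior_features])
    return X @ weights
```

The predicted trace could then be used as the target focus offset for the remote scanner. Any prediction error would directly become residual z-motion.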

Using the sample surface as a reference

Another set of methods images the brain while simultaneously scanning a reference, such as an object in the brain (a “guide star”), the brain surface, or the brain tissue itself. The required correction of the scan position is then extracted from this reference in real time.

Laffray et al. (2011) used a reflectance-based imaging modality to detect the position of the spinal cord (which is well known to move a lot!). In their approach, they only corrected for lateral tissue motion, most likely because their microscope was not equipped with a z-scanning device. But it seems possible to generalize this method to axial movement correction as well. For brain imaging, the limitation of this approach is that the brain surface is typically held in place by a coverslip and moves relatively little; it is rather the deeper parts of the brain that move much more.
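A lateral shift can be estimated from a reference image in several ways, and the exact computation used by Laffray et al. (2011) is not described here. As a generic illustration, the sketch below uses phase correlation, a standard FFT-based registration technique. It is a swapped-in technique and not necessarily the method used in that paper. It returns integer-pixel shifts only and assumes a purely translational shift.

```python
import numpy as np


def phase_correlation_shift(reference, frame):
    """Estimate the integer (dy, dx) translation of `frame` relative to `reference`."""
    F_ref = np.fft.fft2(reference)
    F_frm = np.fft.fft2(frame)
    cross_power = F_frm * np.conj(F_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # keep only the phase difference
    corr = np.fft.ifft2(cross_power).real  # ideally a single peak at the shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks beyond half the image size correspond to negative shifts (FFT wrap-around).
    if dy > reference.shape[0] // 2:
        dy -= reference.shape[0]
    if dx > reference.shape[1] // 2:
        dx -= reference.shape[1]
    return int(dy), int(dx)
```

To correct the frame, one would shift it back by `(-dy, -dx)`, or, for online correction, add the corresponding offset to the scan-mirror signals.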

Scanning a reference object with an AOD scope

A technically more advanced method for real-time 3D movement correction was developed by Griffiths et al. (2020). In this study, the authors used a random-access AOD-based microscope that records only small regions around single neurons. Post-hoc movement correction was therefore not really an option, not even in the lateral direction. To address this problem, the authors developed a closed-loop system based on an FPGA to perform online motion correction. AODs (acousto-optic deflectors) are very fast scanners, so scan speed is not an issue. The authors defined a 3D reference object that was scanned together with the imaged neurons, and corrected the focus of the microscope according to the movements of the reference object. This method seems very elegant. The only limitation that I can see is that it requires an AOD microscope: very few z-scanning techniques would be able to perform an ultra-fast z-scan of a reference (maybe TAG lenses would be an option?). And AOD microscopes have long been known to be very challenging to operate and align, although some companies seem to have managed to make these systems somewhat more user-friendly during the last decade.
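The core of such a closed loop is to estimate the 3D displacement of the reference object from a small 3D scan of it. The minimal sketch below uses the intensity-weighted centroid of that scan. It is not necessarily what Griffiths et al. (2020) implemented. The voxel sizes and the assumption of a known, constant background are hypothetical. The returned displacement is the amount by which all scan positions would be moved to follow the object.

```python
import numpy as np


def centroid_3d(volume, background=0.0):
    """Intensity-weighted centroid (z, y, x), in voxels, of a small 3D scan of the reference object."""
    # Subtract an (assumed known) background and discard negative values.
    v = np.clip(np.asarray(volume, dtype=float) - background, 0.0, None)
    total = v.sum()
    if total <= 0:
        raise ValueError("reference object not found (no signal above background)")
    coords = np.indices(v.shape).reshape(3, -1)  # z, y, x index of every voxel
    return coords @ v.ravel() / total


def focus_correction(reference_volume, current_volume,
                     voxel_size_um=(1.0, 0.5, 0.5), background=0.0):
    """Displacement (um, as z, y, x) by which all scan positions would be moved to follow the object."""
    shift_vox = centroid_3d(current_volume, background) - centroid_3d(reference_volume, background)
    return shift_vox * np.asarray(voxel_size_um)
```

A centroid is cheap enough to compute at high rates, but it is biased by uneven background or by neighboring bright structures inside the reference scan. A cross-correlation with a template is a more robust, if more expensive, alternative.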

Scanning the brain with OCT-based imaging

Recently, I came across another paper about online movement correction, by Tucker et al. (2023). The authors used optical coherence tomography (OCT) to measure brain motion and to correct for it online. The paper presents a correction of brain motion in x and y only and does not address the more relevant problem of z-motion (although this is discussed in depth as well). On a side note, the corrections were applied by adding signals to the regular voltage signals that drive the scan mirrors. This reminded me of my own simple solution a few years ago, where I added two analog signals with a summing amplifier. Anyway, a z-correction would be more difficult to implement in a standard microscope because it requires a z-scanning device in the setup (such as a remote z-scanner, a tunable lens, or a piezo attached to the objective).

Still, I found the idea compelling, and on paper the principle of OCT seems simple. An OCT system is more or less an interferometer that uses backscattered infrared light to form an image. Imaging speed is high for modern variants of OCT, and the achievable resolution seems to be around 1 μm. If you have now become interested in OCT, I can recommend this introduction, especially section 2.3 on “Signal formation in OCT”.
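To make the principle of spectral-domain OCT more concrete, consider a single reflector at depth z. It produces a cosine fringe in the detected spectrum whose frequency, as a function of wavenumber k, is proportional to z. A Fourier transform of the spectrum therefore yields a depth profile (an "A-scan"). The sketch below simulates this for one reflector. The center wavelength (1.3 µm), the bandwidth, the Gaussian source spectrum and the sampling are assumed values. Dispersion and the refractive index of tissue are ignored. The spectrum is sampled linearly in k, whereas real spectrometers usually require resampling to achieve this.

```python
import numpy as np


def simulate_sd_oct_depth(reflector_depth_um, center_wavelength_um=1.3,
                          bandwidth_um=0.1, n_samples=2048):
    """Return (depth axis in um, A-scan magnitude) for a single simulated reflector."""
    # Wavenumbers sampled linearly in k (assumed; real spectrometers sample in wavelength).
    k_min = 2 * np.pi / (center_wavelength_um + bandwidth_um / 2)
    k_max = 2 * np.pi / (center_wavelength_um - bandwidth_um / 2)
    k = np.linspace(k_min, k_max, n_samples)
    # Gaussian source spectrum centered in the k range.
    source = np.exp(-((k - k.mean()) / (0.25 * (k_max - k_min))) ** 2)
    # A reflector at depth z adds a fringe cos(2 k z) (round trip, refractive index 1).
    interferogram = source * (1 + 0.5 * np.cos(2 * k * reflector_depth_um))
    # Remove the non-interfering background (here: the known source spectrum).
    fringes = interferogram - source
    a_scan = np.abs(np.fft.rfft(fringes))
    # cos(2 k z) has a frequency of z / pi cycles per unit of k, hence depth = pi * frequency.
    depth_axis_um = np.pi * np.fft.rfftfreq(n_samples, d=k[1] - k[0])
    return depth_axis_um, a_scan
```

With these assumed parameters, the depth bins are several micrometers wide. The achievable axial resolution is set mainly by the spectral bandwidth of the source, which is why micrometer-resolution OCT requires very broadband light sources.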

Open questions about OCT for online motion correction

Unfortunately, I have never seen or used an OCT microscope. Therefore, I have no intuition for how difficult it would be to achieve high-resolution OCT imaging. What does a volumetric OCT image of a mouse brain look like? Which structures in the brain generate the contrast? Is there any contrast? How long does it take to acquire such an image with sufficient contrast at high resolution? What is the price tag of a spectral-domain OCT system? Is it more or less difficult to build than, e.g., a two-photon microscope?

I have the impression that the study by Tucker et al. (2023) only scratches the surface of what is possible, and that OCT imaging could be combined with 2P imaging without compromising the excitation or detection pathways. If you are an expert in this technique and happen to meet me at the next conference, I’d be interested to learn more!

P.S. Did I miss an interesting paper on this topic? Let me know!

[Update 2023-09-10: Also check out the comment below by Lior Golgher, who brings up additional interesting papers, including approaches for volumetric (3D) imaging. These enable post-hoc motion correction in the axial direction or the selection of an extended, more motion-robust 3D ROI.]

References

Chen, J.L., Pfäffli, O.A., Voigt, F.F., Margolis, D.J., Helmchen, F., 2013. Online correction of licking-induced brain motion during two-photon imaging with a tunable lens. J. Physiol. 591, 4689–4698. https://doi.org/10.1113/jphysiol.2013.259804

Griffiths, V.A., Valera, A.M., Lau, J.Y., Roš, H., Younts, T.J., Marin, B., Baragli, C., Coyle, D., Evans, G.J., Konstantinou, G., Koimtzis, T., Nadella, K.M.N.S., Punde, S.A., Kirkby, P.A., Bianco, I.H., Silver, R.A., 2020. Real-time 3D movement correction for two-photon imaging in behaving animals. Nat. Methods 1–8. https://doi.org/10.1038/s41592-020-0851-7

Laffray, S., Pagès, S., Dufour, H., De Koninck, P., De Koninck, Y., Côté, D., 2011. Adaptive Movement Compensation for In Vivo Imaging of Fast Cellular Dynamics within a Moving Tissue. PLOS ONE 6, e19928. https://doi.org/10.1371/journal.pone.0019928

Paukert, M., Bergles, D.E., 2012. Reduction of motion artifacts during in vivo two-photon imaging of brain through heartbeat triggered scanning. J. Physiol. 590, 2955–2963. https://doi.org/10.1113/jphysiol.2012.228114

Streich, L., Boffi, J.C., Wang, L., Alhalaseh, K., Barbieri, M., Rehm, R., Deivasigamani, S., Gross, C.T., Agarwal, A., Prevedel, R., 2021. High-resolution structural and functional deep brain imaging using adaptive optics three-photon microscopy. Nat. Methods 18, 1253–1258. https://doi.org/10.1038/s41592-021-01257-6

Tucker, S.S., Giblin, J.T., Kiliç, K., Chen, A., Tang, J., Boas, D.A., 2023. Optical coherence tomography-based design for a real-time motion corrected scanning microscope. Opt. Lett. 48, 3805–3808. https://doi.org/10.1364/OL.490087

Hi Peter! What’s up?

Thanks for this excellent treatise!

First, I suggest you add the following references [1-4], especially [1]. You might also want to have a quick look at pages 55-56 of my doctoral thesis [5], where I acquired continuous volumetric imagery of longitudinal neuronal dynamics using a TAG lens. Figures 4.7a and 4.8 suggest that selecting a sufficiently large volume of interest surrounding each cell body can improve signal fidelity, even if the student is too lazy or dumb to apply 3D motion correction. Moreover, timelapse continuous 3D imagery can always be reanalyzed by better motion correction algorithms (and students) as they become available over time, whereas AOD-based or multi-planar imagery is limited by the compromises made during data acquisition.
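The point about a sufficiently large volume of interest can be illustrated with a toy model. The sketch below assumes a cell with a Gaussian axial brightness profile. The profile width `cell_sigma_um` and the plane spacing are hypothetical values. For a single plane and for a stack of summed planes, it compares the apparent signal drop caused purely by an axial shift of the cell. The model ignores noise, neuropil contamination and neighboring cells, all of which a larger volume would also pick up.

```python
import numpy as np


def roi_signal(z_shift_um, roi_half_depth_um, cell_sigma_um=4.0, plane_spacing_um=1.0):
    """Summed fluorescence of a Gaussian-profile cell over all planes within
    +-roi_half_depth_um of its nominal center, with the cell displaced by z_shift_um."""
    planes = np.arange(-roi_half_depth_um, roi_half_depth_um + plane_spacing_um / 2,
                       plane_spacing_um)
    profile = np.exp(-0.5 * ((planes - z_shift_um) / cell_sigma_um) ** 2)
    return profile.sum()


def relative_signal_loss(z_shift_um, roi_half_depth_um, **kwargs):
    """Apparent fractional signal drop caused only by the axial shift (no neuronal activity)."""
    return 1 - (roi_signal(z_shift_um, roi_half_depth_um, **kwargs)
                / roi_signal(0.0, roi_half_depth_um, **kwargs))
```

With these assumed values, a 5 µm shift causes a large apparent drop for a single plane (`roi_half_depth_um=0`). The drop is small when summing over ±15 µm. This is the kind of motion robustness that a volume of interest can provide.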

On the flip side, TAG lenses offer a strictly sinusoidal axial trajectory. I hope Muller-Waller annular MEMS mirrors [6-7] will soon afford the trajectories we actually need, spending most of the duty cycle staring at the deeper portions of the imaged volumes.
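A short calculation shows why a sinusoidal axial trajectory is restrictive. For z(t) = A·sin(2πft) with a uniformly distributed phase, the fraction of time spent between z_lo and z_hi is (arcsin(z_hi/A) − arcsin(z_lo/A))/π. The sketch below evaluates this on a normalized axial range. Which end counts as "deep" is an arbitrary convention here.

```python
import numpy as np


def dwell_fraction_sinusoid(z_lo, z_hi, amplitude=1.0):
    """Fraction of each period that z(t) = amplitude * sin(2*pi*f*t) spends in [z_lo, z_hi]."""
    lo = np.arcsin(np.clip(z_lo / amplitude, -1.0, 1.0))
    hi = np.arcsin(np.clip(z_hi / amplitude, -1.0, 1.0))
    return float((hi - lo) / np.pi)


# Normalized axial range [-1, 1]; here +1 is taken as the deepest position.
deep_quarter = dwell_fraction_sinusoid(0.5, 1.0)
shallow_quarter = dwell_fraction_sinusoid(-1.0, -0.5)
central_half = dwell_fraction_sinusoid(-0.5, 0.5)
```

Each of these three values is exactly one third. A sinusoidal scan therefore spends as much time in the shallowest quarter of its range as in the deepest quarter. A freely programmable trajectory could instead allocate more dwell time to deeper planes.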

Thanks,

Lior

[1] Stringer and Pachitariu 2019 https://doi.org/10.1016/j.conb.2018.11.005

[2] Akemann et al. 2022 https://doi.org/10.1038/s41592-021-01329-7

[3] Kong et al. 2015 https://doi.org/10.1038/nmeth.3476

[4] Kong et al. 2016 https://doi.org/10.1364%2FBOE.7.003686

[5] Golgher 2022 https://commons.wikimedia.org/w/index.php?title=File:Rapid_volumetric_imaging_of_numerous_neuro-vascular_interactions_in_awake_mammalian_brain.pdf&page=71

[6] Ersumo et al. 2020 https://doi.org/10.1038/s41377-020-00420-6

[7] Yalcin et al. 2022 https://doi.org/10.1109/JSSC.2022.3177360

Hi Lior,

Thanks a lot for these interesting additions! I will update the blog post to refer to your comment.

I found all the references interesting to read, but especially your reference [4] (Kong et al., 2016), because they actually measured and quantified axial motion artifacts in the brain of running mice.

In the context of this volumetric imaging approach using, for example, TAG lenses, one might also mention the alternative approach using Bessel beams. However, the Bessel beam approach of course does not allow one to quantify axial motion artifacts afterwards. And Bessel beam scanning is of course worse in terms of excitation efficiency…

The annular MEMS mirror that you referred to (your refs [6-7]) sounds really interesting! Do you know when it might become available as a product to a user like myself?

And thanks for referring to your thesis, which is really impressive! The amount of technical expertise and problem-solving skill that went into this thesis must have been huge! (Btw, a few weeks ago I saw your work highlighted in a talk by Pablo Blinder, and I got the same impression of a lot of technical knowledge behind your contributions to the project.)

Peter

Hi Peter,

Thank you so much for your kind remarks on my doctoral project! We faced many technical challenges, some of them rather fascinating, and it took some five years to arrive at truly impressive 4D imagery. Kong and Cui scooped me early along this journey with their seminal work (Kong et al. 2015). And while we hoped that PySight [1] and rPySight [2] would allow other labs to integrate TAG lenses into their own multi-photon setups, I’m not aware of any other lab actually using them as yet ):

I really like Kong et al. 2016, but other labs might argue that the 3D brain motion they reported is specific to their setup and affected by the non-standard head post they devised. In my 4D datasets we often see small bright spots, seemingly similar to the lipofuscin granules identified by Martin Lauritzen in adult mice. In principle, we could use these granules as fiducial markers, measure 3D brain motion from their displacement, and correct for it. In practice, I just threw some of my datasets at CaImAn and some other pipelines. I then watched in agony as they tried in vain to extract calcium transients from the sparse imagery, with and without binning and motion correction.
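Using such granules as fiducial markers could look roughly like the following minimal sketch. It assumes that the spots have already been detected and localized in 3D (in µm) in a reference volume and in the current volume. Each current spot is matched to its nearest reference spot, and the median displacement serves as a robust estimate of a rigid 3D translation. The matching radius `max_match_dist` is a hypothetical parameter. It must be larger than the expected motion but smaller than half the typical spacing between spots. Non-rigid deformation is ignored.

```python
import numpy as np


def estimate_translation(ref_points, cur_points, max_match_dist=3.0):
    """Robust rigid 3D translation (um) from fiducial positions given as (N, 3) arrays."""
    ref = np.asarray(ref_points, dtype=float)
    cur = np.asarray(cur_points, dtype=float)
    # Pairwise distances between all current and reference spots (fine for small N).
    d = np.linalg.norm(cur[:, None, :] - ref[None, :, :], axis=2)
    nearest = d.argmin(axis=1)
    ok = d[np.arange(len(cur)), nearest] <= max_match_dist  # drop unmatched spots
    if not ok.any():
        raise ValueError("no fiducial matched within max_match_dist")
    displacements = cur[ok] - ref[nearest[ok]]
    return np.median(displacements, axis=0)  # median is robust to a few wrong matches
```

The median makes the estimate tolerant to a few spurious or mismatched spots, such as a newly appearing granule.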

Section 4.1.3 of my thesis relates to Na Ji’s work with Bessel beams [3]. Bessel beam microscopy is resilient to axial drift, but it offers a limited axial range, is ill-suited for dense GECI expression, and does not allow one to track how waves of vasodilation propagate upwards along penetrating arteries. I also argued that a “transient increase in hematocrit or a local aggregation of red blood cells will reduce the amount of photons collected off of a given vessel segment, thereby weakening the coupling between vascular brightness and vascular volume”. However, I did not back this assertion with an explicit analysis of brightness vs. calculated vascular volume.

As for the annular MEMS mirrors, I wrote to Rikky Muller in October 2022, but she was called up for jury service (facepalm). Following up with her last December yielded no response. But I think Laura or Rikky will likely be more responsive if you contact them yourself. Let me know!

Until their technology matures, I plan to integrate a customized Mitutoyo TAG lens [4] into one or two of our multi-photon microscopes in 2024. Unlike our earlier TAG-related works, it will involve passing back and forth through the lens, à la Kong et al. 2015. The outstanding question is whether their driver will be able to stabilize the lens at the laser powers needed for two-photon microscopy, which are higher than those specified by Mitutoyo for this lens model. They also offer a high-power lens model, but its weaker optical power translates into an insufficient axial range.

Enjoy your weekend,

Lior

[1] https://doi.org/10.1364/OPTICA.5.001104

[2] https://doi.org/10.1117/1.nph.9.3.031920

[3] https://commons.wikimedia.org/w/index.php?title=File:Rapid_volumetric_imaging_of_numerous_neuro-vascular_interactions_in_awake_mammalian_brain.pdf&page=62

[4] https://www.mitutoyo.com/webfoo/wp-content/uploads/TAGLENS_2277-1.pdf

Thanks for your reply about MEMS mirrors and for your comments about Bessel beam imaging of vessels and TAG lenses! As for the bright spots, I sometimes see similar things after virus injection. Neurons or other cells seem to compartmentalize the fluorophores into very small compartments, which then appear as bright spots (at least that is my interpretation). This green fluorescence can be distinguished from lipofuscin-like spots, because lipofuscin would appear with similar brightness when using, e.g., a red instead of a green emission filter.
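The two-channel criterion can be written as a simple ratio test. This sketch assumes that each spot's background-subtracted intensity has been measured in both a green and a red emission channel. The threshold `ratio_threshold` is a hypothetical value that would need to be calibrated, for example on spots whose class is unambiguous.

```python
import numpy as np


def classify_spots(green, red, ratio_threshold=3.0):
    """Label spots as 'green-specific' or 'lipofuscin-like' from their green/red intensity ratio.

    Lipofuscin-like (broadband) spots are assumed to be similarly bright in both channels,
    so their green/red ratio stays below the (hypothetical) threshold.
    """
    g = np.asarray(green, dtype=float)
    r = np.asarray(red, dtype=float)
    ratio = g / np.maximum(r, 1e-9)  # avoid division by zero for spots absent in red
    return np.where(ratio >= ratio_threshold, "green-specific", "lipofuscin-like")
```

A ratio is used rather than absolute intensities because it does not depend on how bright an individual spot is overall.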