“Incremental Mutual Information: A New Method for Characterizing the Strength and Dynamics of Connections in Neuronal Circuits” is a 2010 paper by A. Singh and N. Lesica in PLoS Computational Biology. It describes a method that can serve as an alternative to correlation analysis in some cases.*

What does Incremental Mutual Information (IMI) promise, compared to correlation analysis and correlation functions? First, like mutual information, which I have discussed before, it also captures non-linear dependencies between neuronal activities. Second, “it has the potential to disambiguate statistical dependencies that reflect the connection between neurons from those caused by other sources (e.g. shared inputs or intrinsic cellular or network mechanisms) provided that the dependencies have appropriate timescales” (taken from the abstract). This sounds interesting, but we have to look at the ‘appropriate timescales’ in more detail later.

How does this method work? It is built on entropy, or more precisely on the reduction of uncertainty. For mutual information, we calculated how much the uncertainty about the activity of neuron X is reduced by knowing the activity of neuron Y at the same time point (or at a certain delay). Incremental mutual information calculates 1) how much the uncertainty about the activity of neuron X is reduced by knowing what happens around the time points considered for neurons X and Y, and 2) how much the uncertainty is reduced by knowing the above AND the activity of neuron Y in the considered time bin. The difference between the two is called incremental mutual information. Simply put, the method concentrates on a certain time bin of neuron Y and asks which information comes from this time bin and not from the other, neighboring time bins. (The technical aspects become clearer when you have a look at figure 1 of the paper.)

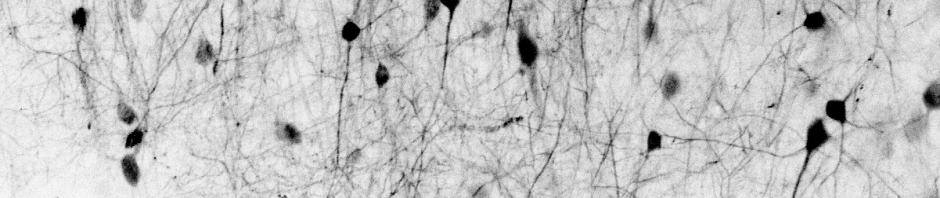
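To make the "difference of two uncertainties" concrete, here is a minimal Python sketch of my own; it is not the paper's Matlab code. Writing C for the neighboring bins of X around time t and of Y around time t+δ, the quantity described above is H(X_t | C) − H(X_t | C, Y_{t+δ}), which is the conditional mutual information I(X_t; Y_{t+δ} | C). The function names, the parameter `k` (number of neighboring bins on each side) and the sign convention of `delay` are assumptions made for illustration; the estimator is a plain plug-in estimate from counts, without any bias correction.

```python
import numpy as np
from collections import Counter

def entropy_bits(counts):
    """Plug-in Shannon entropy (in bits) of a Counter of observed states."""
    n = np.array(list(counts.values()), dtype=float)
    p = n / n.sum()
    return float(-(p * np.log2(p)).sum())

def incremental_mi(x, y, delay, k=1):
    """Plug-in estimate of I(X_t ; Y_{t+delay} | C) in bits.

    x, y  : 1-D integer sequences of equal length (e.g. 0/1 per time bin).
    delay : offset of the Y bin relative to the X bin (sign convention assumed).
    k     : neighboring bins on each side used as conditioning set C (assumed).
    """
    x = np.asarray(x).tolist()
    y = np.asarray(y).tolist()
    offsets = [o for o in range(-k, k + 1) if o != 0]
    # Only use time points for which all neighbors lie inside the recording.
    start = k + max(0, -delay)
    stop = len(x) - k - max(0, delay)
    c_all, c_x, c_y, c_ctx = Counter(), Counter(), Counter(), Counter()
    for t in range(start, stop):
        s = t + delay
        ctx = tuple(x[t + o] for o in offsets) + tuple(y[s + o] for o in offsets)
        c_all[(x[t], y[s]) + ctx] += 1
        c_x[(x[t],) + ctx] += 1
        c_y[(y[s],) + ctx] += 1
        c_ctx[ctx] += 1
    # H(X|C) - H(X|C,Y) = H(X,C) + H(Y,C) - H(X,Y,C) - H(C)
    return (entropy_bits(c_x) + entropy_bits(c_y)
            - entropy_bits(c_all) - entropy_bits(c_ctx))
```

For a toy signal in which Y is simply a copy of a random binary X delayed by two bins, this estimate is close to 1 bit at `delay=2` and close to 0 at `delay=0`, because only the bin at the correct delay adds information beyond its neighbors.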

It follows that the activity of interest has to have the same temporal extent as a time bin. Additionally, the ‘shared inputs’, which lead to correlations that are not due to internal connectivity, have to change on a time scale slower than a time bin. This means that the time bin has to be chosen such that the slow external common influence does not change from one bin to the next, while the internal activity does. Unfortunately, this is seldom the case. In the paper, they use a slowly varying visual input (an LED lamp) and record some neuron pairs from retina and LGN, where the firing rate encodes the light intensity. For higher brain areas like the hippocampus, cortex or any other non-primary sensory region, this separation is rarely or almost never given, since the input is simply more complex.

Another drawback of the method is that it involves a lot of conditional probabilities. Therefore, an experimental recording has to be very long in order to give satisfactory results (i.e., in order to cover all these conditional probabilities with data). In the experiments used in the paper (electrophysiological paired recordings), the experiment’s duration is 7 min. For the simulations in the paper, time traces with 2^20 time bins are used (maybe overkill?).
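A rough back-of-the-envelope count shows why the conditioning is so data-hungry. The sketch below is my own illustration, not taken from the paper: it assumes binary activity per bin, `k` neighboring bins on each side of the bin of interest for both neurons, and a hypothetical bin size of 5 ms for the 7 min recording. It simply counts how many distinct joint states have to be covered and how many samples are available per state on average.

```python
def joint_states(k, levels=2):
    """Number of distinct joint states: 2*k neighbors for each of the two
    neurons plus the two bins of interest, each taking `levels` values."""
    return levels ** (4 * k + 2)

def samples_per_state(n_bins, k, levels=2):
    """Average number of time bins available per joint state."""
    return n_bins / joint_states(k, levels)

n_sim = 2 ** 20                # number of bins used for the paper's simulations
n_exp = 7 * 60 * 1000 // 5     # 7 min recording at a hypothetical 5 ms bin size

for k in (1, 2, 3, 4):
    print(k, joint_states(k),
          samples_per_state(n_sim, k),
          samples_per_state(n_exp, k))
```

The number of states grows by a factor of 16 for every additional neighboring bin, so even 2^20 bins are spread thin once more than a few neighbors are conditioned on.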

Moreover, the discussion of the paper mentions that, for mathematical reasons, it would be difficult and not practical to extend the algorithm from neuron pairs to a large number of neurons. Ok.

The paper also mentions a different, already known method to deal with external inputs. This method uses several trials and works with correlations between e.g. trial 1 of neuron X and trial 2 of neuron Y, assuming that the external input remains the same, but the internal network activity changes. Google for “shift-predictor” if you want to know more about that.
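The trial-shuffling idea can be sketched in a few lines of Python. This is a generic illustration of a shift predictor under my own assumptions, not code from the paper: activity is stored as arrays of shape (trials, time bins), the stimulus is identical in every trial, and the function names are made up for this example. The raw cross-correlogram is computed within trials, the shift predictor between trial i of X and the neighboring trial of Y, and the difference keeps what is not explained by the shared stimulus.

```python
import numpy as np

def mean_crosscorr(x_trials, y_trials, max_lag):
    """Trial-averaged sum over t of x[t] * y[t + lag], for each lag."""
    n_trials, n_bins = x_trials.shape
    lags = np.arange(-max_lag, max_lag + 1)
    cc = np.zeros(len(lags))
    for j, lag in enumerate(lags):
        if lag >= 0:
            a, b = x_trials[:, :n_bins - lag], y_trials[:, lag:]
        else:
            a, b = x_trials[:, -lag:], y_trials[:, :n_bins + lag]
        cc[j] = (a * b).sum(axis=1).mean()
    return lags, cc

def shift_corrected(x_trials, y_trials, max_lag):
    """Raw cross-correlogram minus the shift predictor."""
    lags, raw = mean_crosscorr(x_trials, y_trials, max_lag)
    # Pair trial i of X with a different trial of Y (cyclic shift by one):
    # the stimulus-locked part survives, trial-specific coupling does not.
    y_shifted = np.roll(y_trials, 1, axis=0)
    _, predictor = mean_crosscorr(x_trials, y_shifted, max_lag)
    return lags, raw - predictor
```

Note that this only removes influences that repeat identically from trial to trial; slow drifts across trials would still leak into the corrected correlogram.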

To sum it up: for special cases, this method might work. However, I think it is only applicable if you already know what you are looking for and only need a means to quantify your observation. With a new data set which shows nothing interesting at first glance, incremental mutual information is, in my opinion, unlikely to point out the hidden message. First, it needs a lot of data. Second, the separation of timescales for external and internal signals is a severe condition. Third, the time bin size has to be chosen wisely and according to the activity transient times (change of membrane potential, change of firing rate, whatever…). These are unknown for real experiments, making everything really hard. Overall, this method is interesting in theory, but I would only apply it in very specialized cases.

*) The paper is accompanied by a small Matlab program. It relies on a toolbox which is not maintained as of now. N. Lesica was kind enough to send me the missing toolbox via mail. However, as I’m working on a system where I can’t easily compile MEX-files (Ubuntu 13.04, Matlab 2012b; Update: this issue can be solved), I wasn’t able to check it out myself. Anyway, the paper’s code is shorter than 200 lines, well-described, and can be easily understood and modified.

Update 2014-03-22: Finally, I managed to compile the MEX-files and am therefore able to use Lesica’s program. It turned out that my data set (C. elegans neuronal activity, as shown before) is too small to yield anything that can be discerned from noise. Additionally, I have the feeling that my data are not appropriate for IMI, as possible causal effects are on the same timescale as the temporal extent of the activity of a single neuron. Finally, even when I consider only two neurons, I have to play with a) the time binning of the data and b) the time lags to be considered for analysis. Imagine the parameter space you have to deal with when considering 16 neurons or more: if there are no strikingly clear effects and the recording time is not very long, it is impossible to find these effects. Sidenote: The Matlab program is well-written and easy to understand. It works out of the box when you just type something like

calcIMI(Activity_Trace{neuron1},Activity_Trace{neuron2},-5:5,2,1,0,0,1);

with the delays considered being -5:5 time bins, and Activity_Trace being your own data. This is nice and easy to test out, as it should be.