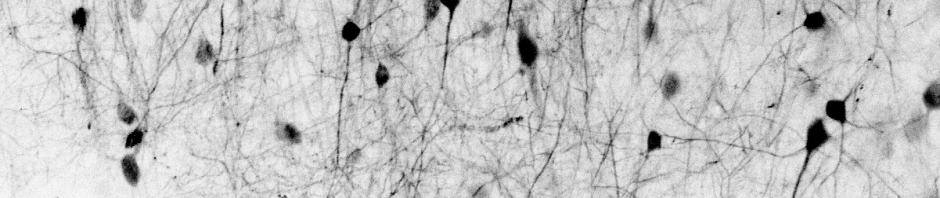

Calcium imaging is used to record the activity of neurons in living animals. Often, these activity patterns are analyzed after the experiment to investigate how the brain works. Alternatively, the activity patterns can be extracted in real time, decoded, and used to control a device or computer. Such brain-computer interface (BCI) or closed-loop paradigms have one important limiting factor: the delay between the neuronal activity and the control that this activity exerts over the device or computer.

For calcium imaging, this delay is determined by the time needed to record the calcium images from the brain, but also by the slowness of calcium indicators. How much do calcium indicators limit such online processing in practice? And how far can this limitation be mitigated by the family of GCaMP8 indicators, which have been shown to exhibit faster rise times (<10 ms) than previous genetically encoded indicators?

We addressed this question using CASCADE, a supervised algorithm for spike inference that extracts spiking information from calcium transients. We slightly redesigned the algorithm such that it only has access to a short stretch of the recording after the time point of interest.

This modification was straightforward because CASCADE is based on a simple 1D convolutional network: we only had to shift its receptive field slightly in time. The time window after the time point of interest, which we call the “integration time”, determines the delay for closed-loop applications such as BCI paradigms based on calcium imaging. To infer spiking activity from calcium signals with very good performance, an integration time of 30-40 ms was required for GCaMP8 and 50-150 ms for GCaMP6.
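The idea of a shifted receptive field can be illustrated with a minimal NumPy sketch. This is not CASCADE's actual code; the function name `asymmetric_windows` and its parameters `n_past` and `n_future` are hypothetical. The sketch only cuts a trace into the input windows that a network would see if its receptive field covered `n_past` frames before and `n_future` frames after each time point, so that the integration time corresponds roughly to `n_future` divided by the frame rate.

```python
import numpy as np


def asymmetric_windows(trace, n_past, n_future):
    """Cut a 1D trace into one input window per time point (hypothetical helper).

    Each window covers frames t - n_past ... t + n_future, i.e. the model
    sees n_future frames after the time point of interest t. Time points
    whose window would extend beyond the edges of the trace are skipped.
    """
    trace = np.asarray(trace, dtype=float)
    width = n_past + 1 + n_future
    # Row i holds frames i ... i + width - 1 (requires NumPy >= 1.20)
    windows = np.lib.stride_tricks.sliding_window_view(trace, width)
    # The time point that each window makes a prediction for
    t = np.arange(n_past, n_past + windows.shape[0])
    return t, windows


if __name__ == "__main__":
    trace = np.arange(10.0)
    t, w = asymmetric_windows(trace, n_past=3, n_future=1)
    print(t)     # time points that have a complete window
    print(w[0])  # frames 0..4, used to predict time point 3
```

In this picture, a regular model corresponds to a window that reaches many frames into the future, whereas an online model at 30 Hz with an integration time of about 100 ms would correspond to `n_future=3`; only the future part of the window adds to the closed-loop delay.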

The CASCADE models trained for online spike inference are available on our GitHub repository. The model names start with “Online”, are listed as usual in this text file, and can be used like any other CASCADE model. For example, you can perform spike inference with your usual CASCADE model and then repeat the same spike inference with an “online” model, which will give you an impression of how well the online model performs on your data. The only difference is that the “online” model uses only a few time points from the future, whereas a regular CASCADE model typically uses 32 time points from the future.
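To put this comparison into numbers, one option (our suggestion, not a function provided by CASCADE) is a per-neuron Pearson correlation between the two predictions. The hypothetical helper `per_neuron_correlation` below expects two arrays of shape (neurons, time points), for instance the outputs of `cascade.predict(model_name, traces)` run once with a regular model and once with an “Online” model. Because such predictions may contain NaN values at the edges of the recording, time points that are NaN in either array are skipped.

```python
import numpy as np


def per_neuron_correlation(regular, online):
    """Pearson correlation between two spike-rate predictions, per neuron.

    Both arrays have shape (n_neurons, n_timepoints). Time points that are
    NaN in either prediction are ignored. Neurons without enough valid,
    non-constant data get NaN.
    """
    regular = np.asarray(regular, dtype=float)
    online = np.asarray(online, dtype=float)
    if regular.shape != online.shape:
        raise ValueError("predictions must have the same shape")
    corr = np.full(regular.shape[0], np.nan)
    for i, (a, b) in enumerate(zip(regular, online)):
        # Keep only time points where both models produced a value
        valid = ~np.isnan(a) & ~np.isnan(b)
        if valid.sum() > 1 and a[valid].std() > 0 and b[valid].std() > 0:
            corr[i] = np.corrcoef(a[valid], b[valid])[0, 1]
    return corr
```

Correlations close to 1 would indicate that the online model recovers nearly the same spike rate traces as the regular model for your recordings; this is only a relative comparison, not a measure of accuracy against ground truth.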

What does “a few time points” mean? Let’s break this down using a model name, for example Online_model_30Hz_integration_100ms_smoothing_25ms_GCaMP8. This model was trained for calcium imaging data acquired at 30 Hz with GCaMP8 indicators (the ground truth comprised all GCaMP8 variants) and with a smoothing of 25 ms. The crucial parameter is the integration time, here indicated as 100ms. This means that the model uses 100 ms of data from the future, which corresponds to 3 frames for this 30 Hz model.
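The naming scheme can also be decoded programmatically. The sketch below is hypothetical: the regular expression is inferred from the single example name above, so other online model names may not follow it exactly, and rounding partial frames down is our assumption.

```python
import math
import re
from dataclasses import dataclass

# Pattern inferred from the example name; an assumption, not an official spec
NAME_PATTERN = re.compile(
    r"^Online_model_(?P<rate>[\d.]+)Hz_integration_(?P<integration>\d+)ms"
    r"_smoothing_(?P<smoothing>\d+)ms_(?P<indicator>\w+)$"
)


@dataclass
class OnlineModelInfo:
    frame_rate_hz: float
    integration_ms: int
    smoothing_ms: int
    indicator: str

    @property
    def future_frames(self) -> int:
        # Number of frames after the time point of interest; partial frames
        # are rounded down (assumption). The small epsilon guards against
        # floating-point error when the product is an exact integer.
        return math.floor(self.integration_ms * self.frame_rate_hz / 1000 + 1e-9)


def parse_online_model_name(name: str) -> OnlineModelInfo:
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not an online model name: {name}")
    return OnlineModelInfo(
        frame_rate_hz=float(match["rate"]),
        integration_ms=int(match["integration"]),
        smoothing_ms=int(match["smoothing"]),
        indicator=match["indicator"],
    )


if __name__ == "__main__":
    info = parse_online_model_name(
        "Online_model_30Hz_integration_100ms_smoothing_25ms_GCaMP8"
    )
    print(info)
    print(info.future_frames)  # 100 ms at 30 Hz
```

Under the same assumption, an integration time of 40 ms at 30 Hz would give 1 future frame (1.2 rounded down).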

If you are not sure which model to select for your application, or if you need another pretrained model for online spike inference, just drop me an email or open an issue on the GitHub repository.

For more details about the analysis, and about how the best choice of integration time for online spike inference depends on the noise levels of the calcium imaging recordings and potentially on other conditions such as the method used to express the calcium indicator or temperature, check out our recent preprint, where we also analyzed several other aspects of spike inference with a specific focus on GCaMP8: Spike inference from calcium imaging data acquired with GCaMP8 indicators.